Our mission at KooBits is to democratize quality education for young learners, aged 6-12, all over the world. Our flagship Math & Science platforms deliver comprehensive education content in a personalized & interactive way to make learning more engaging and effective for students.

With a learner base of 250,000, we’ve witnessed inspiring stories of students who have not only improved their grades but also developed a genuine passion for Math and Science by practicing on KooBits.

While we’re proud of what we’ve achieved so far, we recognise that there is still a long way to go to fulfil our ultimate vision. We believe that we can accelerate our progress by leveraging AI. As an educational platform for young children, we are committed to using it responsibly, as a force for good.

Introducing StoryScience

One of the ways that we’ve leveraged AI is in the creation of StoryScience. It is a collection of story-driven comics that introduces kids to science concepts in an engaging and approachable way.

Why story-driven comics?

Well, for 6-8 year-olds, grasping scientific concepts and terminology can be very challenging. For example, terms such as stomach, mucus, saliva and tongue in the human body topic are difficult words for students at this age. Furthermore, some students are still developing their reading habits. Science has to be made less intimidating for them.

Stories have been known to have a profound impact on learning, especially for young children. Research has shown that implicit learning through stories is highly effective. By combining short-form text with rich visual aids, we wanted to create a series of comics that seamlessly blend science concepts into captivating storylines, capturing young readers’ imaginations while teaching science concepts implicitly.

The Challenge with Comic Production

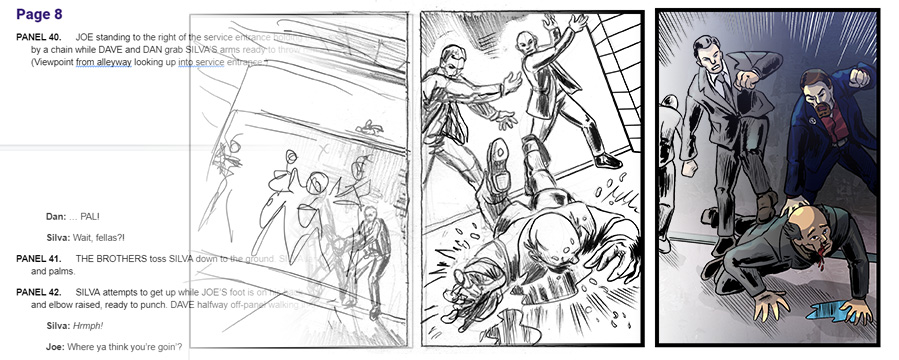

However, creating comics is a resource-intensive task with multiple steps involved:

- Storyline writing to come up with the story and characters

- Penciling to draw the main characters

- Inking to go over the pencil lines and fill in the solid areas

- Coloring to add color to the inked artwork

- Lettering to add the dialogue balloons, the dialogue text and the sound effects

Streamlining Comic Creation with AI

Back in the day, each step in the process required a separate person. Thanks to technology, some steps are now partly automated: inking can be done much faster using digital painting tools, and lettering can be done with digital fonts instead of handwritten text.

Penciling and coloring are still time-consuming. Drawing characters in various poses and against different backgrounds can be repetitive, and if there are multiple illustrators, maintaining consistency becomes a challenge.

At KooBits, we imagined a workflow in which our content experts could simply feed the storyline and key concepts from the education curriculum to our model, and the model would instantly generate all the illustrations, producing curriculum-aligned comic content.

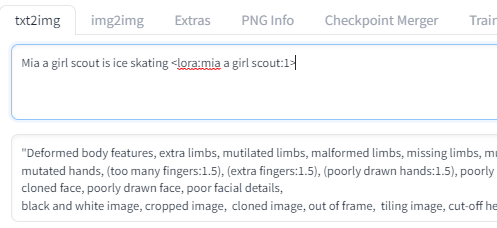

We started exploring Stable Diffusion, a text-to-image diffusion model. It takes a text input and generates an image that matches that description. It works by starting from random noise (in a compressed, or latent, version of the image) and then gradually removing that noise over a series of denoising steps, refining the whole image at every step, until the final output emerges. The model has been trained on images in a wide range of styles: photographs, oil paintings and, of course, comics.
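To make the "start from noise, then denoise step by step" idea concrete, here is a toy sketch of DDPM-style sampling in plain Python. It is not Stable Diffusion: a real model predicts the noise with a neural network conditioned on the text prompt, and runs in a latent space. Here, purely so the loop can run, an oracle computes the noise from a known `target` image (a hypothetical stand-in for what a trained model would produce). The point to notice is that every step updates all pixels at once, not one pixel at a time.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise schedule: how much noise the forward process adds at each step."""
    return [beta_start + (beta_end - beta_start) * i / (num_steps - 1) for i in range(num_steps)]


def toy_reverse_diffusion(target, num_steps=1000, seed=0):
    """Start from pure Gaussian noise and denoise the whole image at every step.

    `target` is a flattened image standing in for the model's eventual output.
    A real model would *predict* the noise; this oracle computes it from
    `target` so the toy loop is runnable.
    """
    rng = random.Random(seed)
    betas = linear_beta_schedule(num_steps)
    alphas = [1.0 - b for b in betas]
    alpha_bars, prod = [], 1.0
    for a in alphas:
        prod *= a
        alpha_bars.append(prod)

    # Every pixel begins as independent random noise.
    x = [rng.gauss(0.0, 1.0) for _ in target]
    for t in reversed(range(num_steps)):
        a, a_bar, b = alphas[t], alpha_bars[t], betas[t]
        # Oracle noise estimate (a trained network would approximate this).
        eps = [(xi - math.sqrt(a_bar) * x0) / math.sqrt(1.0 - a_bar) for xi, x0 in zip(x, target)]
        # DDPM mean update, applied to every pixel in the same step.
        mean = [(xi - b / math.sqrt(1.0 - a_bar) * e) / math.sqrt(a) for xi, e in zip(x, eps)]
        if t > 0:
            # Add a little fresh noise (posterior variance), except on the last step.
            var = b * (1.0 - alpha_bars[t - 1]) / (1.0 - a_bar)
            x = [m + math.sqrt(var) * rng.gauss(0.0, 1.0) for m in mean]
        else:
            x = mean
    return x
```

For example, `toy_reverse_diffusion([0.0, 0.5, -0.5, 1.0])` starts from four random values and ends at (numerically) the 2x2 target image. In a real pipeline, the quality of the result depends entirely on how well the trained network predicts the noise.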

Our plan was to train the model to perform the repetitive parts of the job, namely:

- Auto-generate the characters in a variety of poses and expressions

- Auto-generate comic backgrounds

- Auto-generate the complete comic series

Part 1: Auto-generate the characters in a variety of poses and expressions

To bring our characters to life, our talented illustrator, Lu Peng, sketched a few variations of each character from different angles and with varying expressions. These illustrations served as valuable character training data for our model.
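The post does not say how these sketches were captioned for training. One common pattern in DreamBooth- or LoRA-style fine-tuning is to pair each image with a caption containing a rare identifier token, so the model learns to associate that token with the character. The sketch below assumes hypothetical file names of the form `mia_<angle>_<expression>.png` and a hypothetical token `mia_kb`. It only builds the caption manifest (JSON Lines with `file_name` and `text` fields, a layout Hugging Face's `imagefolder` dataset loader can read), not the training itself.

```python
import json
from pathlib import Path

# Hypothetical identifier token: a rare string the base model has no prior
# meaning for, so fine-tuning can bind it to our character.
CHARACTER_TOKEN = "mia_kb"


def caption_for(file_name, token=CHARACTER_TOKEN):
    """Build a caption from a (hypothetical) file name like 'mia_side_big_grin.png'."""
    stem = Path(file_name).stem                 # 'mia_side_big_grin'
    _, angle, expression = stem.split("_", 2)   # ['mia', 'side', 'big_grin']
    return f"comic illustration of {token} girl, {angle} view, {expression.replace('_', ' ')}"


def manifest_lines(file_names):
    """One JSON object per image, pairing the file with its caption."""
    return [json.dumps({"file_name": name, "text": caption_for(name)}) for name in file_names]


def write_manifest(file_names, out_path="metadata.jsonl"):
    """Write the manifest next to the training images."""
    Path(out_path).write_text("\n".join(manifest_lines(file_names)) + "\n", encoding="utf-8")
```

For instance, `caption_for("mia_side_big_grin.png")` returns `"comic illustration of mia_kb girl, side view, big grin"`. Encoding angle and expression in each caption is what later lets a prompt ask for a specific pose or mood.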

Using this input, we trained the Stable Diffusion model, and it worked its magic, generating the image of Mia shown below.

We were quite happy with this look and feel, even though it’s not an exact replica of the original illustration. If we wanted it to be more like the original illustration, providing more training data would be necessary.

Armed with this trained model, we can now generate an array of new Mia variations instantly without the need for manual drawing.

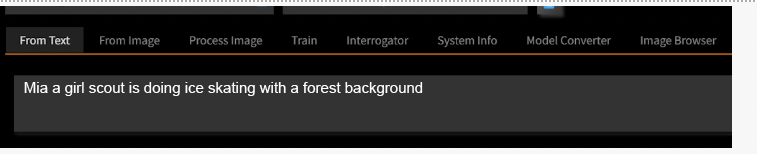

By simply providing a text-based input, such as “Mia ice-skating,” our model promptly generates a corresponding image depicting Mia gracefully gliding with ice-skates on.

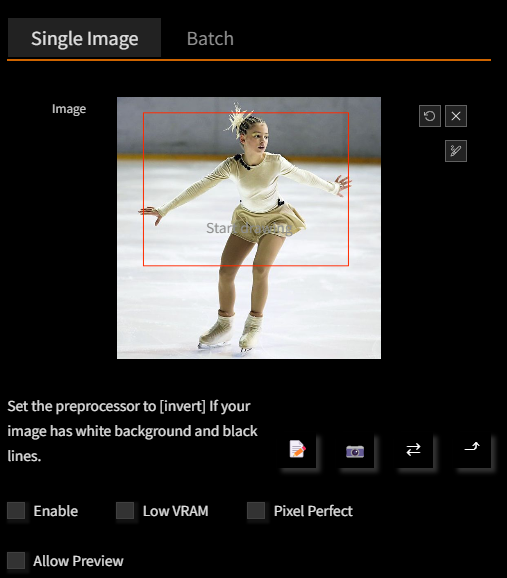

But the possibilities don’t end there. We can take it a step further by combining a text input with an image input, resulting in a more refined and detailed depiction of Mia.

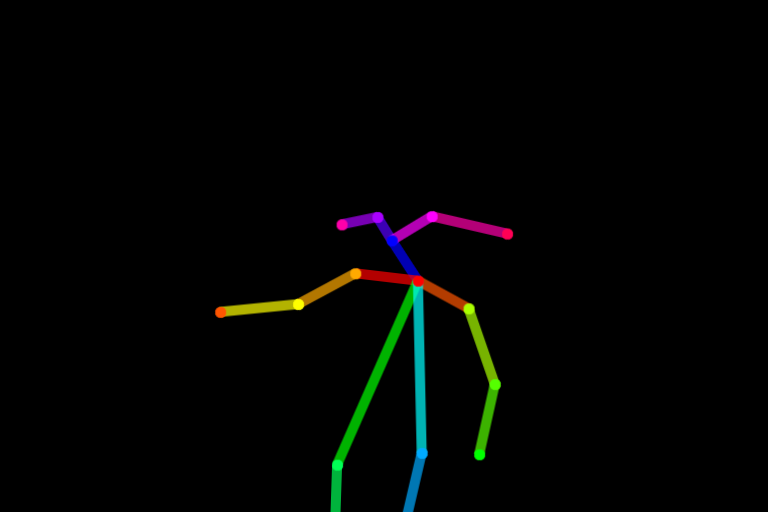

From the input image, the model extracts a stick-figure representation that captures the position of each limb, which defines the desired pose.

It then applies that pose to Mia, even incorporating additional elements from the text input, such as the forest background.
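The post doesn't name the pose tool it used; ControlNet with an OpenPose-style skeleton is one widely used example of this kind of pose conditioning. The idea is that body keypoints are detected in the reference image and drawn as a stick figure, and that drawing guides generation. Below is a minimal sketch of the "keypoints to stick figure" half only. The joint names, limb list and coordinates are simplified, hypothetical choices, not a real detector's keypoint format.

```python
# Simplified, hypothetical skeleton: which joints are connected by a limb.
LIMBS = [
    ("head", "neck"),
    ("neck", "l_hand"), ("neck", "r_hand"),
    ("neck", "hip"),
    ("hip", "l_foot"), ("hip", "r_foot"),
]

# Example pose on a 9x10 canvas: (x, y) per joint, y grows downward.
EXAMPLE_POSE = {
    "head": (4, 0), "neck": (4, 2), "l_hand": (1, 4), "r_hand": (7, 1),
    "hip": (4, 6), "l_foot": (2, 9), "r_foot": (6, 9),
}


def line_points(x0, y0, x1, y1):
    """Integer points on a segment, using Bresenham's line algorithm."""
    points = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        points.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return points
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def render_pose(joints, width, height):
    """Rasterize the skeleton into a grid of 0/1 pixels (1 = limb)."""
    canvas = [[0] * width for _ in range(height)]
    for a, b in LIMBS:
        for x, y in line_points(*joints[a], *joints[b]):
            canvas[y][x] = 1
    return canvas


if __name__ == "__main__":
    for row in render_pose(EXAMPLE_POSE, 9, 10):
        print("".join("#" if px else "." for px in row))
```

Because the conditioning image holds only limb positions and no clothing or face details, the same skeleton can be reused for any character; the text prompt and the fine-tuned model supply who is drawn and where.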

And for more unique poses or expressions, we can even just scribble a rough drawing to auto-generate the image we want!
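A rough scribble usually needs light preprocessing before it can guide generation. The sketch below assumes, as an illustration, that the conditioning model expects white strokes on a black background; it thresholds a grayscale drawing (dark ink on white paper) into that form and thickens the strokes slightly so thin pencil lines aren't lost. The threshold of 128 and the 1-pixel dilation radius are arbitrary choices.

```python
def to_scribble_map(gray, threshold=128):
    """Grayscale sketch (0 = black ink, 255 = white paper) -> binary map
    with white strokes (255) on a black background (0)."""
    return [[255 if px < threshold else 0 for px in row] for row in gray]


def dilate(binary, radius=1):
    """Thicken strokes: any pixel within `radius` of a stroke becomes a stroke."""
    h, w = len(binary), len(binary[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if binary[y][x]:
                for yy in range(max(0, y - radius), min(h, y + radius + 1)):
                    for xx in range(max(0, x - radius), min(w, x + radius + 1)):
                        out[yy][xx] = 255
    return out
```

Thresholding throws away shading on purpose: for a scribble input, only the rough outline should influence the result, leaving the details to the model.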

This way, instead of having to draw Mia over and over again manually, our model can instantly generate the desired pose and expression for our character, removing the repetitive penciling, inking, and coloring process.

Just for fun, we also asked the model to generate an image of Mia as a grown-up, and here’s how she looks! How cool is that!

The look and feel of these 3 variations are not quite there yet, though. You can tell that they have a slight anime look compared to our Mia. This is likely because Stable Diffusion’s base model was trained extensively on anime-style images and retains that influence. To achieve a more consistent look for grown-up Mia, we will need to provide more character training data to the model.

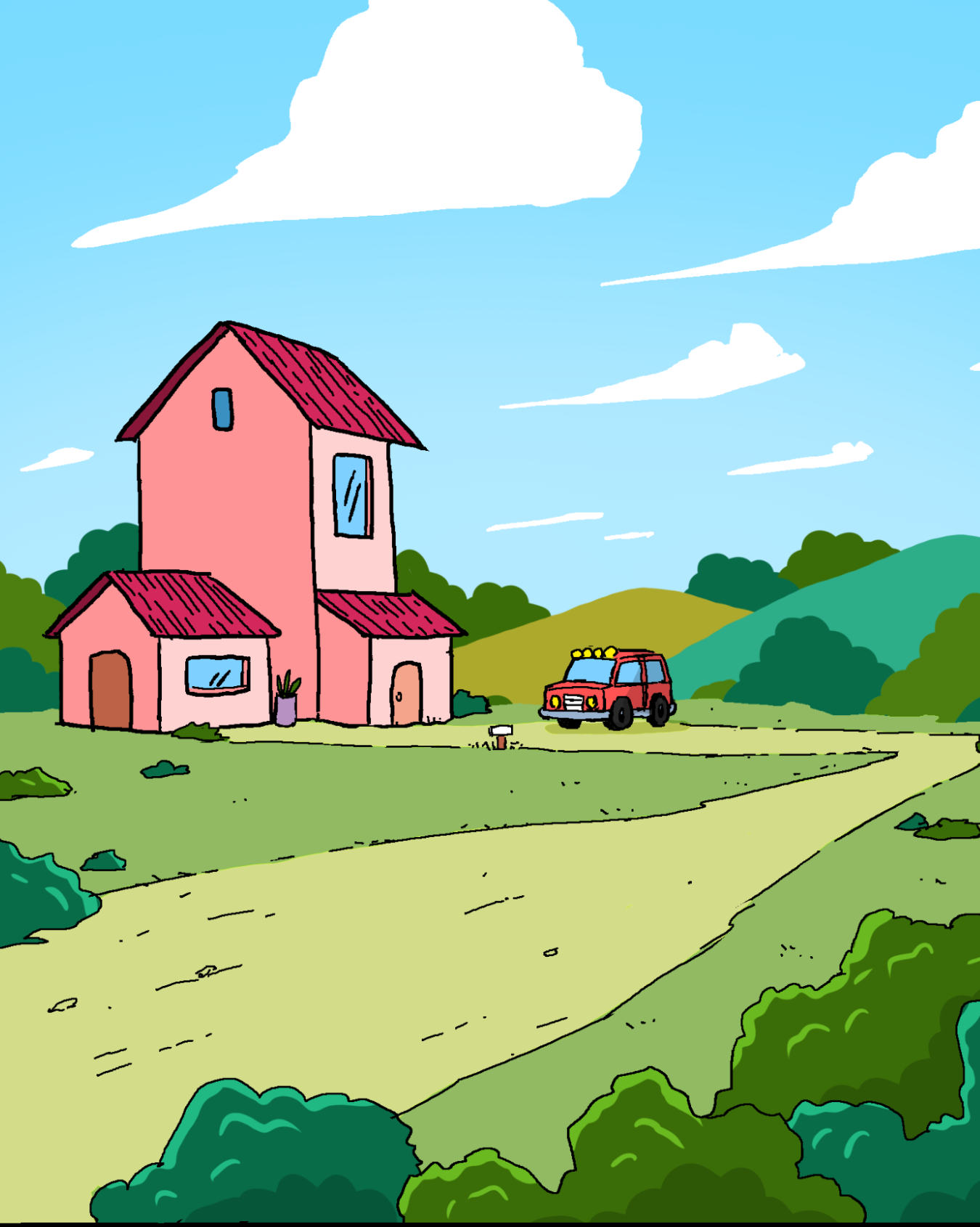

Part 2: Auto-generate comic backgrounds

A huge challenge in auto-generating backgrounds was ensuring that they match the character’s style. As you can see in the ice-skating example, the forest background, while beautiful, doesn’t quite match the character style.

To address this challenge, we considered two options:

Option 1: Provide Background Training Data to the model

We could generate examples of various background types and use them as training data for our model. By exposing the model to our preferred background styles, we would teach it to generate backgrounds that effortlessly match our character style.

Option 2: Leverage Pre-existing Models

Another approach was to use pre-existing models built by other creators. These models come in various styles, such as photography, oil painting, watercolor and comics. By selecting a pre-existing model that closely aligns with our desired comic character style, we could save the time and effort of training our own model.

We chose the second option because it was less resource-intensive. Since our science comics would feature very different backgrounds (forests, spaceships, human body parts, etc.), we could save time by simply adjusting the pre-existing model’s outputs to achieve the desired style.

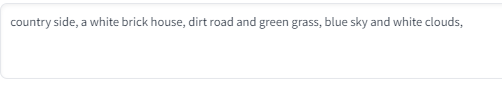

Again, after training, our model can take a text-based or scribble-based input and produce the final output!

Part 3: Auto-generate the complete comic series

Bringing it all together.

After successfully generating the characters and backgrounds, the time had come to assemble them into a complete comic series. We took the characters and backgrounds we had created and combined them to create our very first comic.
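The post doesn't describe how characters and backgrounds were combined. If the character is generated as a separate layer with transparency, the textbook way to place it on a background is the Porter-Duff "over" operator. A minimal per-pixel sketch, assuming straight (non-premultiplied) alpha in the 0-255 range and an opaque background:

```python
def composite_over(character_rgba, background_rgb):
    """Place a character layer (RGBA, alpha 0-255) over an opaque RGB background.

    Per channel: out = alpha * foreground + (1 - alpha) * background.
    """
    result = []
    for fg_row, bg_row in zip(character_rgba, background_rgb):
        row = []
        for (r, g, b, a), (br, bgr, bb) in zip(fg_row, bg_row):
            alpha = a / 255.0
            row.append(tuple(
                round(alpha * f + (1 - alpha) * k)
                for f, k in ((r, br), (g, bgr), (b, bb))
            ))
        result.append(row)
    return result
```

Keeping characters and backgrounds as separate layers until this final step means either one can be regenerated without redrawing the other.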

To automate the process further, we fed this final output back into our model. Now, given just a storyboard, our model can automatically generate scenes for us. However, the generated scenes are not yet flawless: our model still cannot choose camera angles or break scenes down into specific components in a way that truly captivates human readers.
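The post doesn't describe its storyboard format or how storyboards become prompts. As one possible sketch, assume a hypothetical plain-text storyboard with one panel per line in the form `characters | action | setting`; each line is turned into a text prompt. The `shot` field defaults to a fixed value because, as noted above, choosing camera angles is exactly what the model can't yet do on its own, so in this sketch a person would set it.

```python
from dataclasses import dataclass


@dataclass
class Panel:
    characters: list[str]
    action: str
    setting: str
    shot: str = "medium shot"  # camera angle: chosen by a person, not the model


def parse_storyboard(text):
    """Parse hypothetical 'characters | action | setting' lines into panels."""
    panels = []
    for line in text.strip().splitlines():
        chars, action, setting = (part.strip() for part in line.split("|"))
        panels.append(Panel([c.strip() for c in chars.split(",")], action, setting))
    return panels


def panel_prompt(panel, style="children's science comic style"):
    """Compose one text-to-image prompt per panel."""
    who = " and ".join(panel.characters)
    return f"{who} {panel.action}, {panel.setting}, {panel.shot}, {style}"


STORYBOARD = """
Mia | looking at a model of the stomach | classroom
Mia, a classmate | shrinking down | inside the human mouth
"""

if __name__ == "__main__":
    for panel in parse_storyboard(STORYBOARD):
        print(panel_prompt(panel))
```

The second panel, for example, becomes `"Mia and a classmate shrinking down, inside the human mouth, medium shot, children's science comic style"`.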

Nevertheless, this is just the beginning. As technology advances, we remain open to the possibilities and excited about the potential improvements in the future.

With the ability to generate high-quality images quickly and easily, KooBits is able to create engaging science comics that capture children’s imaginations and help them learn about science in a fun and accessible way. A single comic that used to take 14 days to produce can now be completed in just a fraction of the time, meaning that we can develop lots of great content for our users quickly.

KooBits’ use of AI to create engaging science comics for children is a testament to the power of technology in education. And we are committed to using it responsibly and as a force for good in educating the young generation.

Excited to check out StoryScience? We’ve included one of our comics you can preview for free! Just click here to access it.

For the full series, start your 7-day FREE trial of KooBits Science to get instant access once we release it.

Written by:

- Celesta: Former teacher turned education content creator, passionate about developing engaging learning experiences for students

- Lu Peng: Animator turned AI-enthusiast, who’s working with AI models to automate his own job.

- Rinita: Growth lead & ex-founder. Passionate about working at the intersection of education & tech to bring quality education to the masses.

- Travis: Marketing & creative maestro, specialising in growth strategy across the user-funnel.

- And of course, Stable Diffusion & LLMs, who’ve helped us create these comics & this article 😉